Whether you’ve been preparing for it or not, the Next Generation 9-1-1 (NG911) migration is under way in the USA as well as in Canada. As you are probably aware, GIS data will play an important role in dispatch and situation analysis. To ensure a seamless flow of data between systems, the National Emergency Number Association (NENA), which has been working on NG911 for several years, has developed a GIS Data Model that acts as a data standard for the GIS data used by stakeholders.

NG911 GIS Data Model

The data model consists of a list of Required, Strongly Recommended and Recommended layers (or tables). For each of these layers, a set of Mandatory, Conditional or Optional attributes has been defined, along with the data type expected for each attribute. The goal is to facilitate access to the required information and to reduce the risk and difficulty of adapting each municipality’s GIS data to its various users.

Some of the required layers include:

- Road Centerlines

- Site or Structure Address Points

- PSAP Boundary

- Etc.

How Can FME Help?

In many ways, of course! As you know, FME can easily work with attribute names, attribute values and, of course, geometries (geospatial entities). In this article, we’ll give examples of three tasks that will help you prepare and validate your GIS data against the NG911 standard:

- Attribute Mapping

- Data Validation

- Spatial Validation

Best of all, once your validation tests are configured in one or more workspaces, you can re-run them as often as needed to assess your data quality, without having to re-create the tests each time!

Attribute Mapping

Attribute Mapping consists of renaming the attributes of your current dataset to the names expected by the NENA Standard. Most of the required fields are expected to contain one specific piece of information, which is why a 1:1 mapping is possible for many of them. Several Transformers can help here, such as the AttributeRenamer, the AttributeManager and the SchemaMapper.

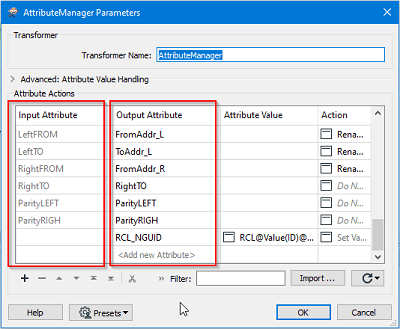

Below, the AttributeManager is renaming some of the source attributes to the NENA Standard.

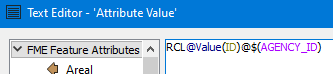

The RCL_NGUID represents the Road Centerline NENA Globally Unique Identifier. Since this attribute does not exist in the main dataset, it is built by concatenating several pieces of information, all combined in the “Attribute Value” section of the transformer.

In this example, we’re concatenating four parts: the constant “RCL”, the ID attribute of the dataset (“@Value(ID)”), the constant “@”, and a published parameter (“$(AGENCY_ID)”) that allows the user to enter the Agency Identifier at runtime. The end result will look like this:

RCL1182039455@cityname.on.ca
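
For readers who prefer to see the logic as code, the same concatenation can be sketched in a few lines of Python. This is only an illustration of the pattern described above, not how FME evaluates the expression internally; the function name `build_rcl_nguid` and the sample values are assumptions made for this example.

```python
def build_rcl_nguid(feature_id, agency_id):
    """Concatenate "RCL" + ID + "@" + agency identifier.

    feature_id plays the role of @Value(ID) and agency_id the role of the
    $(AGENCY_ID) published parameter (both names are illustrative).
    """
    # str() so that numeric IDs read from a dataset concatenate cleanly
    return "RCL" + str(feature_id) + "@" + str(agency_id)


print(build_rcl_nguid(1182039455, "cityname.on.ca"))
# prints: RCL1182039455@cityname.on.ca
```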

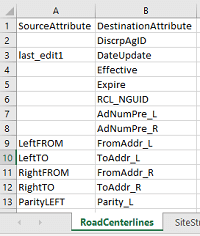

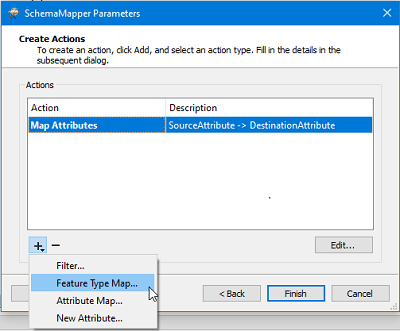

The SchemaMapper Transformer works differently: it performs the renaming based on an external schema mapping document or table. For instance, here is a screenshot of an Excel “mapping document” describing the renaming that needs to take place.

Using this file, the SchemaMapper then performs the renaming. Other SchemaMapper operations are available, such as “Feature Type Mapping”, which renames the feature type attribute of the feature.
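
The idea of table-driven renaming can be sketched in Python as follows. This is a conceptual illustration, not the SchemaMapper's implementation: the column headers (`source_attribute`, `nena_attribute`), the source attribute names and the sample feature are all hypothetical, and the target names should be checked against the official NENA document.

```python
import csv
import io

# Hypothetical mapping table. In practice this would be the Excel or CSV
# mapping document; headers and source names are assumptions.
MAPPING_CSV = """source_attribute,nena_attribute
STREETNAME,St_Name
LAST_EDIT,DateUpdate
"""


def load_mapping(text):
    """Read the mapping table into a {source name: target name} dict."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["source_attribute"]: row["nena_attribute"] for row in reader}


def rename_attributes(feature, mapping):
    """Return a copy of the feature with mapped attributes renamed.

    Attributes that are not listed in the mapping keep their original name.
    """
    return {mapping.get(name, name): value for name, value in feature.items()}


mapping = load_mapping(MAPPING_CSV)
feature = {"STREETNAME": "Main", "LAST_EDIT": "2021-03-01", "SPEED": 50}
print(rename_attributes(feature, mapping))
```

Keeping the mapping in an external table means the renaming rules can be updated without touching the logic that applies them.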

Data Validation

Once the attributes have been mapped correctly to the data standard, it is also useful to check that they hold the expected data types. Some fields expect Printable ASCII characters (P), others a DateTime value (D) or a non-negative integer (N). Other types are also specified in the official GIS Data Model document from NENA.

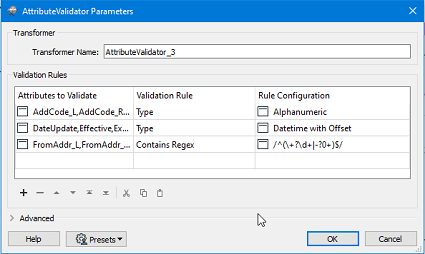

To verify these characteristics, the AttributeValidator and the Tester transformers are your best allies.

The AttributeValidator offers many tests that cover most of what can be verified about an attribute. Available tests include:

- Type

- Encodable In

- Not Null

- Has a Value

- Contains Regex

- Etc.

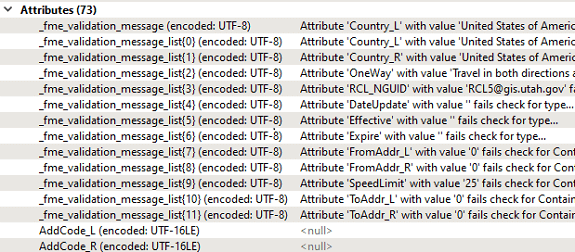

As you can see from the above screenshot, we are testing multiple attributes for various data types using the AttributeValidator. Each feature is output as either “Passed” or “Failed”, just like with the Tester Transformer. If a feature fails multiple tests, the information is stored in a list attribute called “fme_validation_message_list” that we can see in the Data Inspector, in the “Feature Information” window:

We then need to work with the list to extract each validation message separately, which requires more knowledge of how list attributes work in FME.
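
To make the idea of "several tests, one list of messages per feature" concrete, here is a minimal Python sketch. It is not the AttributeValidator's logic: the rule table, the attribute names, the message wording, and the choice to treat DateTime values as ISO 8601 strings and missing values as failures are all assumptions made for illustration.

```python
from datetime import datetime


def is_printable_ascii(value):
    """True if every character is in the printable ASCII range (space to ~)."""
    return isinstance(value, str) and all(" " <= ch <= "~" for ch in value)


def is_datetime(value):
    """True if the value parses as an ISO 8601 date or date-time string."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


def is_non_negative_integer(value):
    """True for ints >= 0 or ASCII digit-only strings; bools are rejected."""
    if isinstance(value, bool):
        return False
    if isinstance(value, int):
        return value >= 0
    return isinstance(value, str) and value.isascii() and value.isdigit()


# Type codes P, D and N as described in the text above.
CHECKS = {"P": is_printable_ascii, "D": is_datetime, "N": is_non_negative_integer}

# Hypothetical rules: attribute name -> expected type code.
RULES = {"St_Name": "P", "DateUpdate": "D", "SpeedLimit": "N"}


def validate(feature, rules=RULES):
    """Return a list with one message per failed test (empty list = passed)."""
    messages = []
    for name, type_code in rules.items():
        value = feature.get(name)
        if value is None or value == "":
            messages.append(f"{name}: missing value")
        elif not CHECKS[type_code](value):
            messages.append(f"{name}: expected type {type_code}, got {value!r}")
    return messages


feature = {"St_Name": "Main", "DateUpdate": "03/01/2021", "SpeedLimit": -5}
for message in validate(feature):
    print(message)
```

Each entry in the returned list corresponds to one failed test, which is why a feature that fails several tests needs list handling to report every problem.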

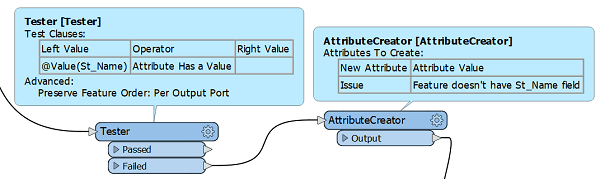

A simpler approach is to use “Tester” transformers. The same validation objectives can be reached, although it takes many more Transformers; each test is controlled by its own set of Transformers, which allows for a more customized approach. For example, the following sequence tests the attribute “St_Name” to verify that it has a value; if it does not, we create an attribute called “Issue” with a custom value that acts as the error message.

This allows for a bit more flexibility, but it requires a specific, custom test for each attribute and for each characteristic that needs to be validated, which results in a larger workspace and additional Transformer manipulation.
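
The single-test pattern described above (test one attribute, then attach an “Issue” message on failure) can be sketched in Python like this. The function name, the message text and the sample features are assumptions for illustration only.

```python
def check_has_value(feature, attribute):
    """Return a copy of the feature, adding "Issue" if the attribute is empty."""
    value = feature.get(attribute)
    if value is None or str(value).strip() == "":
        # Failed branch: attach a custom error message
        return {**feature, "Issue": f"{attribute} has no value"}
    # Passed branch: the feature is returned unchanged
    return dict(feature)


features = [{"ID": 1, "St_Name": "Main"}, {"ID": 2, "St_Name": ""}]
checked = [check_has_value(f, "St_Name") for f in features]
failed = [f for f in checked if "Issue" in f]
print(failed)
```

One such function is needed per attribute and per characteristic, which mirrors the trade-off noted above: more control over each message, at the cost of more pieces to maintain.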

Spatial Validation

Spatial validation could be a whole class of its own, as it can require a very specific set of rules to check against. Without diving too deeply into the details, here is a list of useful Transformers, with their expected usage, to get you started on verifying your geospatial entities.

| Transformer Name | Usage |

|

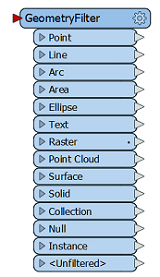

GeometryFilter |

Check for geometry type. Returns an output port for each type of geometry that is being selected and that FME can recognize (point, line, polygon/area, surface, raster, etc.). |

|

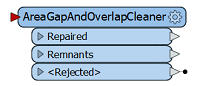

AreaGapAndOverlapCleaner |

Fixes area topologies by repairing gaps and/or overlaps on adjacent areas. |

|

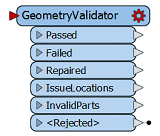

GeometryValidator |

Verifies the geometry integrity of the features going through (ex: self-intersecting polygon). Also attempts in repairing them if possible. |

|

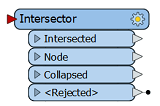

Intersector |

Adds nodes when lines and/or polygons are intersecting. |
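
To give a sense of what one such geometric integrity check involves, here is a simplified Python sketch that detects a self-intersecting polygon ring. It is not the algorithm FME uses: it only detects edges that properly cross each other, ignores touching or overlapping collinear edges, and compares every pair of edges, so it is suitable for illustration rather than large datasets. All function names are made up for this example.

```python
def _orientation(p, q, r):
    """Sign of the cross product (q - p) x (r - p): 1, -1, or 0 if collinear."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return (cross > 0) - (cross < 0)


def _segments_cross(a, b, c, d):
    """True if segments ab and cd properly cross at a single interior point."""
    return (_orientation(a, b, c) * _orientation(a, b, d) < 0
            and _orientation(c, d, a) * _orientation(c, d, b) < 0)


def ring_self_intersects(ring):
    """Check a closed ring (first point == last point) for crossing edges."""
    edges = list(zip(ring[:-1], ring[1:]))
    for i in range(len(edges)):
        for j in range(i + 1, len(edges)):
            # Adjacent edges share an endpoint and never count as crossing
            if _segments_cross(*edges[i], *edges[j]):
                return True
    return False


square = [(0, 0), (2, 0), (2, 2), (0, 2), (0, 0)]
bow_tie = [(0, 0), (2, 2), (2, 0), (0, 2), (0, 0)]
print(ring_self_intersects(square))   # False
print(ring_self_intersects(bow_tie))  # True
```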

FME offers many tools that will help organizations prepare their GIS data for the Next Generation 9-1-1 system.

It can act on multiple levels, such as Attribute Mapping, Data Validation and Spatial Validation. While some effort is needed to build the tests for data quality assessment, the good news is that once the workflow is defined, you can re-use it as often as you want, iterating alongside your data cleanup efforts. To make the whole process even better, FME has tools for building reports in any format that FME can handle!

Need help with preparing your organization for NextGen 911 standards?